Taking Serverless to Task

We are delighted to announce the public preview of serverless tasks in Snowflake!

Snowflake tasks allow users to schedule the execution of a SQL statement. Over the last two years, task usage in Snowflake has grown tremendously. Beyond the organic growth that comes with a larger customer base and heavier usage, adoption of tasks themselves has also grown, and together these have driven a tremendous increase in daily task executions. The reason is simple: any SQL statement, including a call to a stored procedure, can easily be turned into a task.

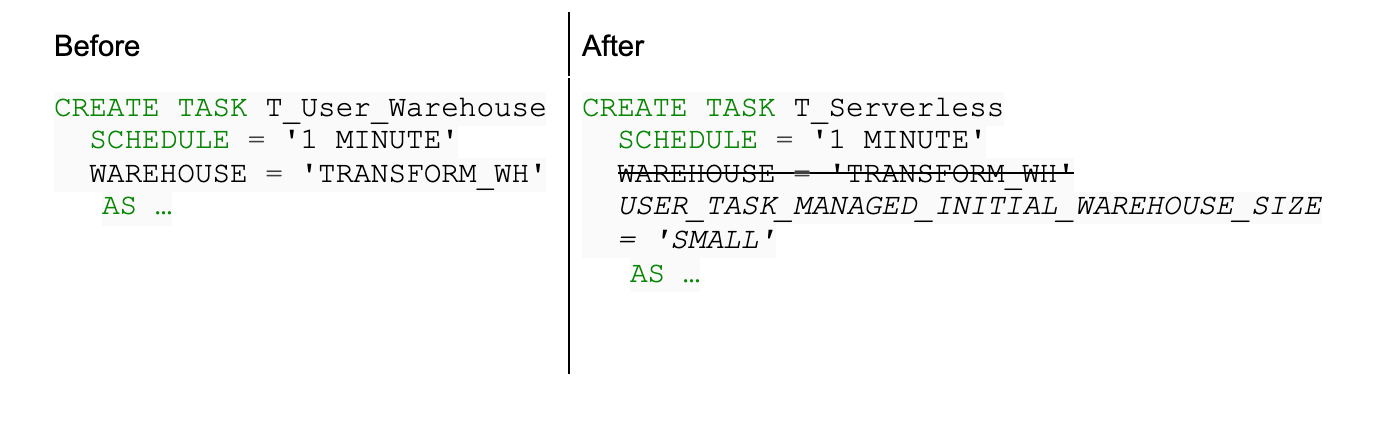

However, data engineers have to configure and manage pipeline tasks manually: whenever they build a new pipeline, they need to work out the warehouse size, idle policy, and idle time. This can be time-consuming and difficult, and the result is often suboptimal, especially for short pipelines that run frequently.

At Snowflake, we strive to make our platform easy to use. In this case, further simplification was possible by making the warehouse optional. The work of choosing a warehouse size and then tuning it for maximum utilization and efficiency can be taken over by the task execution infrastructure, which can see the batch window, the degree of parallelism of the queries executed, and the historical data needed to optimize execution. This is exactly what serverless tasks do. As with other serverless features such as Snowpipe, table auto-clustering, and database replication, the details of warehouses (scale, suspend, resume) are all managed by Snowflake, so users can focus on getting the most out of their data instead of on execution details.

For new tasks, the serverless capability is easy to use: just omit the warehouse and, optionally, specify a starting size. You can also alter an existing task to run in serverless mode if you like. As you add more tasks, you don’t have to worry about how many can run on a given warehouse or whether you are leaving a larger warehouse idle. So give this new capability a spin and let us know what you think on our community site. How-to questions and issues can be posted on Stack Overflow.
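
To make the two paths above concrete, here is a minimal Python sketch that assembles the SQL involved. It rests on some assumptions. The parameter name `USER_TASK_MANAGED_INITIAL_WAREHOUSE_SIZE` and the `ALTER TASK ... UNSET WAREHOUSE` form reflect our understanding of the task DDL and should be checked against the current Snowflake documentation. The helper functions, the task name, and the table names are hypothetical and exist only for illustration. The suspend and resume steps around the alteration are also an assumption about how a scheduled task would typically be modified.

```python
from typing import List, Optional


def create_serverless_task_sql(
    name: str,
    schedule: str,
    statement: str,
    initial_size: Optional[str] = None,
) -> str:
    """Build a CREATE TASK statement that has no WAREHOUSE clause.

    Leaving the warehouse out is what makes the task serverless.
    `initial_size` is the optional starting size (assumed parameter name:
    USER_TASK_MANAGED_INITIAL_WAREHOUSE_SIZE).
    """
    lines = [f"CREATE TASK {name}"]
    if initial_size is not None:
        lines.append(
            f"  USER_TASK_MANAGED_INITIAL_WAREHOUSE_SIZE = '{initial_size}'"
        )
    lines.append(f"  SCHEDULE = '{schedule}'")
    lines.append("AS")
    lines.append(f"  {statement}")
    return "\n".join(lines)


def convert_to_serverless_sql(name: str) -> List[str]:
    """Build the statements that move an existing task to serverless mode.

    Assumption: the task is suspended before it is altered and resumed after.
    """
    return [
        f"ALTER TASK {name} SUSPEND",
        f"ALTER TASK {name} UNSET WAREHOUSE",
        f"ALTER TASK {name} RESUME",
    ]


if __name__ == "__main__":
    # Hypothetical task and tables, used only to show the generated SQL.
    print(
        create_serverless_task_sql(
            name="refresh_daily_sales",
            schedule="5 MINUTE",
            statement="INSERT INTO daily_sales SELECT * FROM raw_sales",
            initial_size="XSMALL",
        )
    )
    for stmt in convert_to_serverless_sql("refresh_daily_sales"):
        print(stmt)
```

Each generated string could then be passed to whatever SQL client you already use. The point of the sketch is only that the serverless form differs from a regular task definition by the absence of a `WAREHOUSE` clause.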