USE CASE

Breaking the Streaming and Batch Silos

No more separation of streaming and batch pipelines. Unified ingestion and transformation in a single system.

Simplify data pipelines in one system

Unify stream and batch ingestion and processing pipelines in one architecture. Stream and process data at low latency, right alongside your historical data. Ingest data easily with pre-built streaming connectors via Snowflake Openflow. Use SQL to process streaming data for many use cases with Dynamic Tables.

Optimize cost without wasted compute

Streaming ingest for rowsets is as much as 50% cheaper than file ingestion at the same volume. Dynamic Tables help you avoid wasted compute by offering performance guidance on incremental versus full refresh, so your transformations run more efficiently.

Take advantage of the AI Data Cloud

Secure your streaming data and AI assets via deep integrations with Snowflake Horizon Catalog to maintain the security and governance you've come to rely on.

High-throughput, low-latency streaming data

- Snowflake Openflow directly connects to streaming sources, including Apache Kafka† and Amazon Kinesis†, plus Kafka Sink*, so streaming data can flow into Snowflake and back to streaming systems.

- Snowpipe Streaming's next-generation architecture supports throughput of up to 10 GB per second per table and offers a predictable, ingest-based pricing model that makes running your workflows more cost-effective at scale.

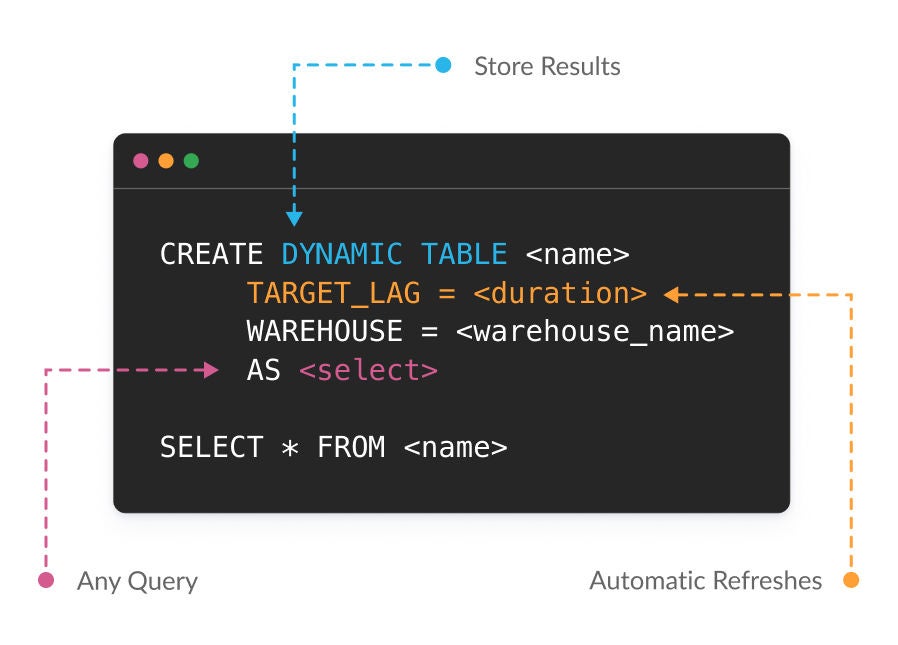

Adjust latency with a single parameter change

- With Dynamic Tables, you can use SQL or Python to declaratively define data transformations. Snowflake manages the dependencies and automatically materializes results based on your freshness targets. Dynamic Tables operate only on data that has changed since the last refresh, making high data volumes and complex pipelines simpler and more cost-efficient.

- Easily adapt to evolving business needs by turning a batch pipeline into a streaming pipeline with a single latency parameter change.
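
As a minimal sketch of what that latency change can look like, the SQL below defines a Dynamic Table with an hourly freshness target and then tightens it to one minute. The object names (`raw_orders`, `order_totals`, `transform_wh`) and the column names are hypothetical placeholders, not part of any Snowflake sample dataset; substitute your own source table and warehouse.

```sql
-- Hypothetical names for illustration only:
--   raw_orders    source table receiving ingested rows
--   order_totals  dynamic table holding the aggregated result
--   transform_wh  warehouse used for refreshes
CREATE OR REPLACE DYNAMIC TABLE order_totals
  TARGET_LAG = '1 hour'          -- batch-style freshness target
  WAREHOUSE = transform_wh
AS
SELECT
  customer_id,
  COUNT(*)    AS order_count,
  SUM(amount) AS total_amount
FROM raw_orders
GROUP BY customer_id;

-- Tighten the freshness target; the transformation query stays the same.
ALTER DYNAMIC TABLE order_totals SET TARGET_LAG = '1 minute';
```

The transformation logic is declared once, and only the `TARGET_LAG` value changes when freshness requirements do. A shorter lag generally means more frequent refreshes, so pick the target your use case actually needs.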

Bring streaming to open lakehouse

- Snowflake’s streaming capabilities work with the Apache Iceberg format, so you can build an open lakehouse architecture easily, with versatile processing options.

- Snowflake Openflow persists data in Apache Iceberg format and supports Apache Polaris-based catalogs. You can then build low-latency, declarative processing with Dynamic Tables on both Snowflake-managed and externally managed Apache Iceberg tables.

Developer Resources

Follow along with Snowflake's Quickstart Tutorials or join an upcoming webinar to get up and running with Snowflake Dynamic Tables.